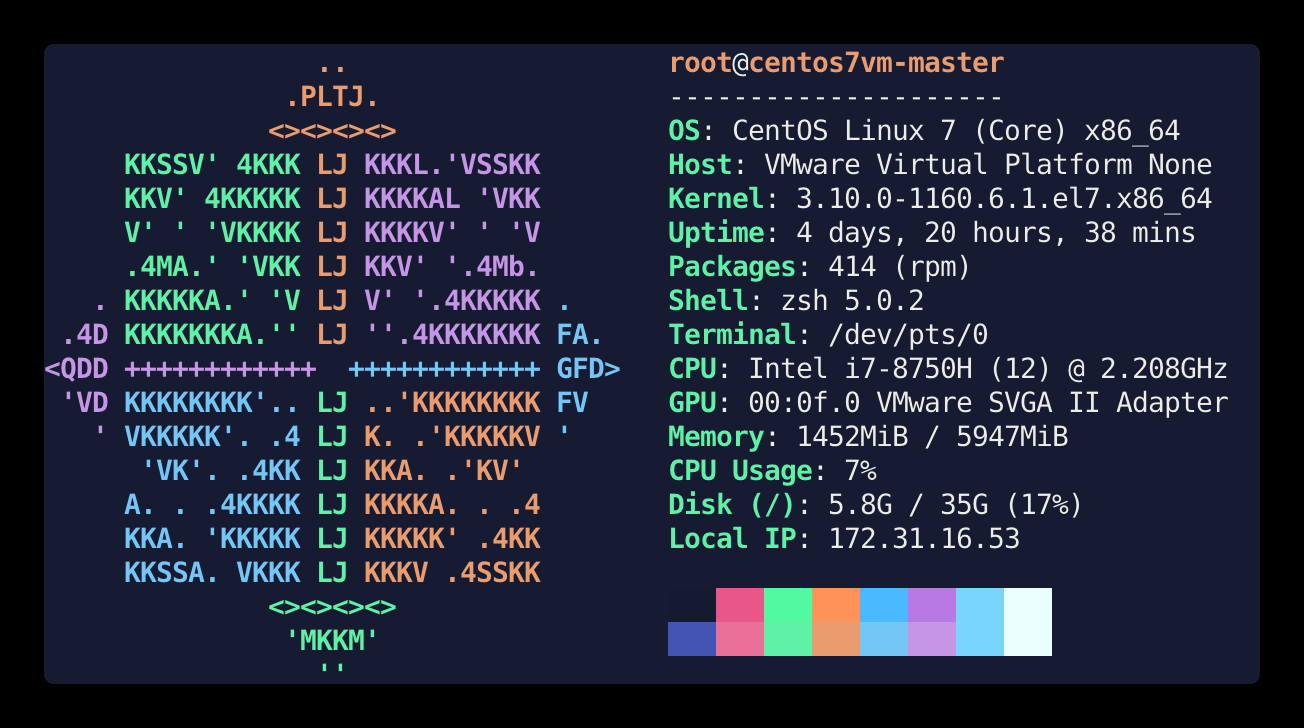

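Cloud Side

The cloud side here is a CentOS 7 virtual machine running on VMware, with root as the login user.

Install Kubernetes

Change the hostname

vim /etc/hostname # change the hostname

vim /etc/hosts # point this machine's IP at the hostname

Verify that the MAC address and product_uuid are unique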

ifconfig -a

cat /sys/class/dmi/id/product_uuid

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux

vim /etc/selinux/config # set SELINUX=disabled in this file
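The same edit can be made without opening an editor. The following is a minimal sketch, assuming GNU sed (as shipped with CentOS 7). The function name `set_selinux_disabled` is made up for illustration, and the demo runs against a temporary file rather than the real /etc/selinux/config.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the SELINUX= line in the given config file.
# Assumes GNU sed (the -i flag edits the file in place).
set_selinux_disabled() {
  local cfg="$1"
  # Only the SELINUX= line is touched; SELINUXTYPE= does not match the pattern.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Demo on a throwaway file so that nothing under /etc is modified.
demo_cfg="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_cfg"
set_selinux_disabled "$demo_cfg"
cat "$demo_cfg"
```

On the real system the call would be `set_selinux_disabled /etc/selinux/config`. The setting in this file is read at boot, so it takes effect with the reboot at the end of the preparation steps.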

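Disable swap

vim /etc/fstab

Comment out the swap entry:

#/dev/mapper/centos-swap swap swap defaults 0 0

Let iptables see bridged traffic

Make sure the br_netfilter kernel module is loaded:

lsmod | grep br_netfilter

If the command prints nothing, load the module with:

modprobe br_netfilter

Then create /etc/modules-load.d/k8s.conf with the following content so that br_netfilter is loaded automatically at boot:

br_netfilter

Create the sysctl configuration

vim /etc/sysctl.d/k8s.conf

Add the following two lines:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Load the configuration

sysctl --system

Reboot the system once the preparation is complete: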

reboot
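After the reboot, the preparation steps above could be double-checked with a small script. This is only a sketch: the function name `check_k8s_prep` and its root-prefix argument are assumptions made for illustration, and it inspects the configuration files only, not the running kernel state.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check the preparation steps against the files under a
# root prefix ("" for the live system, or a scratch directory for testing).
check_k8s_prep() {
  local root="$1" rc=0 key

  grep -q '^SELINUX=disabled' "$root/etc/selinux/config" \
    || { echo "SELinux is not disabled in the config file"; rc=1; }

  # Any uncommented fstab line with a swap field means swap is still enabled.
  if grep -v '^[[:space:]]*#' "$root/etc/fstab" | grep -q '[[:space:]]swap[[:space:]]'; then
    echo "an active swap entry remains in fstab"; rc=1
  fi

  grep -qx 'br_netfilter' "$root/etc/modules-load.d/k8s.conf" \
    || { echo "br_netfilter is not listed for loading at boot"; rc=1; }

  for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
    grep -Eq "^${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$root/etc/sysctl.d/k8s.conf" \
      || { echo "$key is not set to 1"; rc=1; }
  done

  return "$rc"
}

# Usage on the live system:
# check_k8s_prep "" && echo "preparation looks complete"
```

Taking a root prefix keeps the function easy to try against a scratch directory before pointing it at the real /etc.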

Install Docker

A proxy server can be set at this point:

export http_proxy=http://172.31.1.1:1080

export https_proxy=http://172.31.1.1:1080

export no_proxy=172.31.16.53 # this machine's IP

Install the Docker packages

yum update -y && yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum update -y && sudo yum install -y containerd.io-1.2.13 docker-ce-19.03.11 docker-ce-cli-19.03.11

Docker and containerd must be the versions specified in the official documentation.

Configure Docker

mkdir /etc/docker

vim /etc/docker/daemon.json

Add the following content:

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

mkdir -p /etc/systemd/system/docker.service.d

systemctl daemon-reload

systemctl enable docker

systemctl restart docker

Install kubeadm, kubelet and kubectl

Configure the repository

vim /etc/yum.repos.d/kubernetes.repo

Add the following content:

[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable kubelet && systemctl start kubelet

Initialize the master node

Generate the init file

kubeadm config print init-defaults > kubeadm-init.yaml

Two places in this file need to be changed:

Change advertiseAddress: 1.2.3.4 to this machine's address

Change imageRepository: k8s.gcr.io to imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
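The two changes can also be scripted. The sketch below assumes GNU sed and a file laid out as `kubeadm config print init-defaults` writes it (an indented `advertiseAddress:` key and a top-level `imageRepository:` key). The function name `patch_kubeadm_init` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the two edits to a kubeadm init file.
# Assumes GNU sed and that neither value contains a '|' or '&' character.
patch_kubeadm_init() {
  local file="$1" addr="$2" repo="$3"
  sed -i \
    -e "s|^\([[:space:]]*advertiseAddress:\).*|\1 ${addr}|" \
    -e "s|^\(imageRepository:\).*|\1 ${repo}|" \
    "$file"
}

# Example call, using the address and mirror from this guide:
# patch_kubeadm_init kubeadm-init.yaml 172.31.16.53 \
#   registry.cn-hangzhou.aliyuncs.com/google_containers
```

The capture groups keep each key and its original indentation, so only the values change and the YAML structure stays intact.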

The file after the changes looks as follows:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.31.16.53
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: centos7vm-master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}

Unset the proxy server

export http_proxy=

export https_proxy=

Pull the images

kubeadm config images pull --config kubeadm-init.yaml

Run the initialization

kubeadm init --config kubeadm-init.yaml

On success, the following is printed:

Your Kubernetes control-plane has initialized successfully!

......

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.31.16.53:6443 --token abcdef.0123456789abcdef \

    --discovery-token-ca-cert-hash sha256:c8aad7fdc9faa6dddc7d29dfd8af633afe996e07cad2e32617abc358faae4f63

Save the last two lines: kubeadm join is the command a worker node has to run to join the cluster.
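One way to keep the join command is to capture the init output in a file and extract the command from it. The sketch below assumes the output was saved to a log file and contains a single `kubeadm join` command that starts at the beginning of a line and continues on the next line. The function name `extract_join_command` and the file names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the worker join command out of saved
# 'kubeadm init' output and print it as a single line.
extract_join_command() {
  grep -A1 '^kubeadm join' "$1" \
    | sed -e 's/\\$//' -e 's/^[[:space:]]*//' \
    | tr -s '\n' ' ' \
    | sed 's/[[:space:]]*$//'
}

# Usage, assuming the output was captured with
#   kubeadm init --config kubeadm-init.yaml | tee kubeadm-init.log
# extract_join_command kubeadm-init.log > join-command.txt
```

The bootstrap token in kubeadm-init.yaml has a ttl of 24h0m0s, so a saved command stops working after a day. A fresh one can be printed on the master with `kubeadm token create --print-join-command`.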

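Because we are the root user, run the following command; it can also be added to the shell configuration. KUBECONFIG points to the Kubernetes configuration file, which the KubeEdge installation will also use later.

export KUBECONFIG=/etc/kubernetes/admin.conf

Quick test

The NotReady status here is because the network has not been configured yet.

$ kubectl get node

NAME               STATUS     ROLES                  AGE     VERSION
centos7vm-master   NotReady   control-plane,master   5d21h   v1.20.0

Install a network plugin

There are many network plugins to choose from; Calico is used here.

wget https://docs.projectcalico.org/manifests/calico.yaml

vim calico.yaml

Search for CALICO_IPV4POOL_CIDR and change its value to 10.96.0.0/12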

Under the DaemonSet configuration, add a node selector so that Calico is installed only on the cloud side, because KubeEdge does not yet (as of 1.6.1) support CNI plugins. After the change, this part looks as follows:

···
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
    spec:
      nodeSelector:
        kubernetes.io/os: linux
        kubernetes.io/hostname: kubeedge-cloud # change this to the cloud node's hostname
···

Apply the network configuration

kubectl apply -f calico.yaml

Wait a short while, then check the node information; the master's status is now Ready.

$ kubectl get node

NAME               STATUS   ROLES                  AGE     VERSION
centos7vm-master   Ready    control-plane,master   5d21h   v1.20.0

Install Dashboard

Deploy Dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

Once deployed, run kubectl get pods --all-namespaces to check the pod status:

$ kubectl get pods --all-namespaces | grep dashboard

kubernetes-dashboard   dashboard-metrics-scraper-7b59f7d4df-jjpvt   1/1   Running   1   5d1h
kubernetes-dashboard   kubernetes-dashboard-74d688b6bc-x7fqm        1/1   Running   2   5d1h

Create a user

Create a user for logging in to Dashboard:

vim dashboard-adminuser.yaml

Add the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

kubectl apply -f dashboard-adminuser.yaml

Modify the dashboard service

kubectl edit service kubernetes-dashboard -n kubernetes-dashboard

Change type: ClusterIP to type: NodePort, and add nodePort: 30443 under ports.
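As a non-interactive alternative to `kubectl edit`, the same change might be expressed as a patch. This is a sketch under assumptions: it presumes the Service exposes port 443 (as in the v2.0.0 recommended.yaml) and relies on kubectl's default strategic merge patch matching the existing entry by its `port` value. The function name `dashboard_nodeport_patch` is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a patch that switches the Service to NodePort
# and pins the node port for the existing Service port.
dashboard_nodeport_patch() {
  local svc_port="$1" node_port="$2"
  printf '{"spec":{"type":"NodePort","ports":[{"port":%s,"nodePort":%s}]}}\n' \
    "$svc_port" "$node_port"
}

# Print the patch so it can be reviewed before use.
dashboard_nodeport_patch 443 30443

# To apply it (requires a working cluster and KUBECONFIG):
# kubectl -n kubernetes-dashboard patch service kubernetes-dashboard \
#   -p "$(dashboard_nodeport_patch 443 30443)"
```

Printing the patch first makes it easy to confirm the port numbers before anything is sent to the API server.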

Get the token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

The token can be saved for later logins.
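To save only the token rather than the whole description, the output can be filtered. The sketch below assumes the usual `kubectl describe secret` layout, in which the value appears on a line beginning with `token:`. The function name `extract_token` and the output file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read 'kubectl describe secret' output on stdin and
# print only the value of the token field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage, reusing the command from this section:
# kubectl -n kube-system describe secret \
#   "$(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')" \
#   | extract_token > dashboard-token.txt
```

Since the token grants cluster-admin access, the saved file is best kept readable by root only.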

Log in to the dashboard

Open https://172.31.16.53:30443, choose to log in with a token, and paste the token obtained above to enter Dashboard.

Install KubeEdge

wget https://github.com/kubeedge/kubeedge/releases/download/v1.6.1/keadm-v1.6.1-linux-amd64.tar.gz

tar -xvf keadm-v1.6.1-linux-amd64.tar.gz && cd keadm-v1.6.1-linux-amd64/keadm

./keadm init --kube-config=/etc/kubernetes/admin.conf --advertise-address="172.31.16.53"

By default KubeEdge cannot be used together with kube-proxy, so the kube-proxy configuration has to be changed so that it does not run on edge nodes:

kubectl edit daemonsets.apps -n kube-system kube-proxy

After the change it looks as follows:

···
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kube-proxy
  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s-app: kube-proxy
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-role.kubernetes.io/edge
                operator: DoesNotExist
      containers:
···

Add cloudcore as a system service

vim /etc/systemd/system/cloudcore.service

Add the following content:

[Unit]
Description=cloudcore.service

[Service]
Type=simple
ExecStart=/usr/local/bin/cloudcore
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target

Start the service

systemctl daemon-reload && systemctl enable cloudcore

killall cloudcore

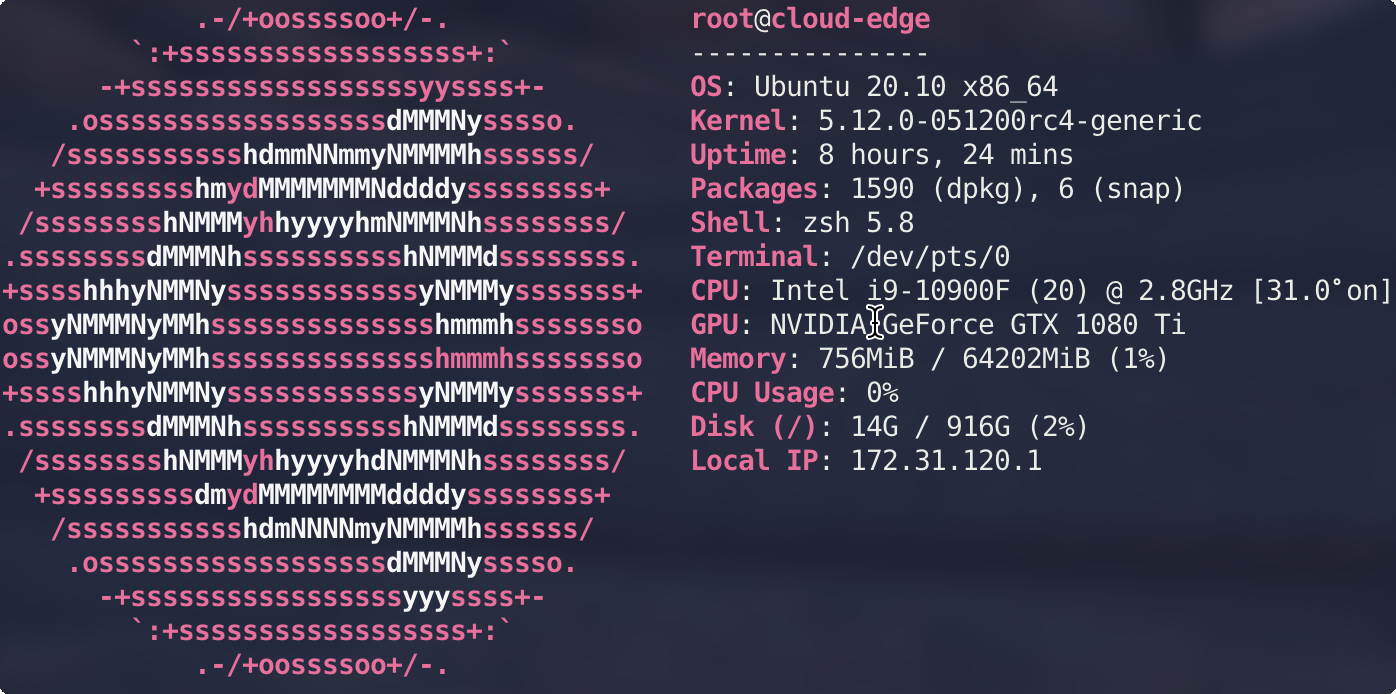

systemctl start cloudcore

Edge Side

Install Docker

apt update && apt install apt-transport-https ca-certificates curl gnupg

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu focal stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt update

apt install containerd.io=1.2.13-2 docker-ce=5:19.03.11~3-0~ubuntu-focal docker-ce-cli=5:19.03.11~3-0~ubuntu-focal

Get the token

First obtain the token that the edge node uses to join. Run on the cloud side:

./keadm gettoken --kube-config=/etc/kubernetes/admin.conf

Install KubeEdge

Download and extract on the edge node:

wget https://github.com/kubeedge/kubeedge/releases/download/v1.6.1/keadm-v1.6.1-linux-amd64.tar.gz

tar -xvf keadm-v1.6.1-linux-amd64.tar.gz && cd keadm-v1.6.1-linux-amd64/keadm

Join the cloud side

Using the token obtained earlier, run the join on the edge node:

./keadm join --cloudcore-ipport=172.31.16.53:10000 --token=27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE

Enable kubectl logs

On the cloud side, set the CLOUDCOREIPS variable to the cloud IP:

export CLOUDCOREIPS="172.31.16.53"

Generate certificates for CloudStream on the cloud side by downloading and running the script:

cd /etc/kubeedge

wget https://github.com/kubeedge/kubeedge/raw/master/build/tools/certgen.sh

chmod +x certgen.sh

./certgen.sh stream

Set up iptables

iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to $CLOUDCOREIPS:10003

Modify cloudcore.yaml to enable cloudStream

vim /etc/kubeedge/config/cloudcore.yaml

After the change it looks as follows:

cloudStream:
  enable: true
  streamPort: 10003
  tlsStreamCAFile: /etc/kubeedge/ca/streamCA.crt
  tlsStreamCertFile: /etc/kubeedge/certs/stream.crt
  tlsStreamPrivateKeyFile: /etc/kubeedge/certs/stream.key
  tlsTunnelCAFile: /etc/kubeedge/ca/rootCA.crt
  tlsTunnelCertFile: /etc/kubeedge/certs/server.crt
  tlsTunnelPrivateKeyFile: /etc/kubeedge/certs/server.key
  tunnelPort: 10004

On the edge side, modify edgecore.yaml to enable edgeStream

vim /etc/kubeedge/config/edgecore.yaml

After the change it looks as follows:

edgeStream:
  enable: true
  handshakeTimeout: 30
  readDeadline: 15
  server: 172.31.16.53:10004
  tlsTunnelCAFile: /etc/kubeedge/ca/rootCA.crt
  tlsTunnelCertFile: /etc/kubeedge/certs/server.crt
  tlsTunnelPrivateKeyFile: /etc/kubeedge/certs/server.key
  writeDeadline: 15

Restart the cloud side

pkill cloudcore

nohup cloudcore > cloudcore.log 2>&1 &

If cloudcore runs as the systemd service configured earlier, systemctl restart cloudcore can be used instead of the two commands above.

Restart the edge side

systemctl restart edgecore.service

Install Metrics-server

Set up iptables

iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to $CLOUDCOREIPS:10003

Download the manifest

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Modify components.yaml; the Deployment section after the change looks as follows:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-role.kubernetes.io/master
                operator: Exists
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port # make sure this is present
        - --kubelet-insecure-tls # make sure this is present
        image: k8s.gcr.io/metrics-server/metrics-server:v0.4.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
      hostNetwork: true # newly added

Set up a Docker proxy

vim /etc/systemd/system/docker.service.d/proxy.conf

with the following content:

[Service]
Environment="HTTP_PROXY=http://127.0.0.1:1080"
Environment="HTTPS_PROXY=http://127.0.0.1:1080"
Environment="NO_PROXY=10.0.0.0/8,127.0.0.0/8,172.16.0.0/12,9.0.0.0/8,192.168.0.0/16"

Restart the Docker service

systemctl daemon-reload

systemctl restart docker

Apply components.yaml

kubectl apply -f components.yaml
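Because the manifest is downloaded from the latest release, its contents can differ from the listing in this guide. A quick check, best run before applying, could confirm that the edited file still carries the settings this setup relies on. The sketch below is a plain text search, not a YAML parser, and the function name `check_metrics_manifest` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that a metrics-server manifest contains the
# settings this guide relies on. It only searches for the literal strings.
check_metrics_manifest() {
  local file="$1" rc=0 needle
  for needle in '--kubelet-use-node-status-port' '--kubelet-insecure-tls' 'hostNetwork: true'; do
    # -F treats the needle as a fixed string; -e keeps needles that start
    # with '-' from being parsed as grep options.
    grep -qF -e "$needle" "$file" || { echo "missing: $needle"; rc=1; }
  done
  return "$rc"
}

# Usage:
# check_metrics_manifest components.yaml && kubectl apply -f components.yaml
```

Once the metrics-server pod is running, `kubectl top nodes` is a simple way to see whether metrics are being collected.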